Why the AGI race may be a bigger near-term existential risk than misalignment

A Fermi-paradox metaphor for coordination failure

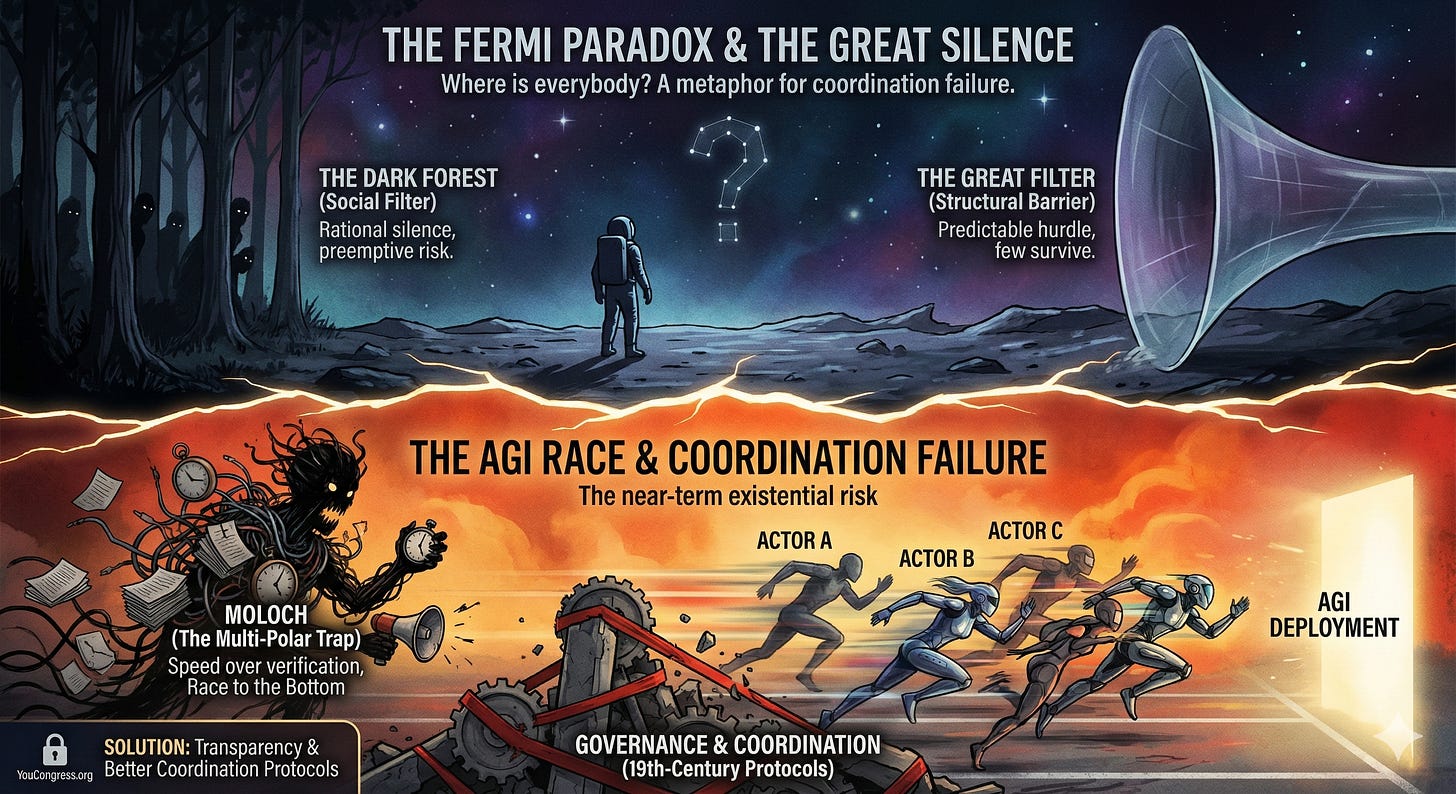

When physicist Enrico Fermi famously asked, ‘Where is everybody?’ he was highlighting a fundamental probabilistic tension: the apparently high likelihood of extraterrestrial civilizations versus the complete lack of evidence for them.

The galaxy is old enough that, if technological civilizations were common, we might expect to have observed signs of advanced technology such as large-scale energy harvesting. Yet we observe only a ‘Great Silence.’ As we approach Artificial General Intelligence (AGI), this paradox shifts from an astrobiological curiosity to a warning sign for our own survival.

The quiet sky may tell us something crucial: Unaligned, expansive AGI is not the default outcome for technological civilizations. The argument here isn’t that AI kills us; it’s that we fail to coordinate around it.

So what explains the silence?

Theory 1: The Dark Forest (A Social Filter)

One explanation is the so-called Dark Forest Theory. It posits that in an environment defined by information asymmetry, where intentions cannot be verified across light-years, the most rational survival strategy is silence. In this model, any detected civilization is viewed as a potential threat that must be neutralized before it undergoes a “technological explosion.”

This mirrors the classic security dilemma now playing out in multi-polar AI competition: fear of a rival’s opaque progress rewards preemptive risk-taking over cooperation.

Theory 2: The Great Filter

A more structural explanation is the Great Filter: the hypothesis that some stage of development is so hard to pass that almost no civilization survives it. If the Filter is behind us (for example, the rare emergence of complex life), we are a lucky anomaly. If it lies ahead, technological maturity may be inherently unstable.

We often imagine AI-driven extinction via a “paperclip maximizer” consuming the biosphere. However, if unaligned, self-replicating AI were the standard outcome of intelligence, the galaxy would likely be crowded with Von Neumann probes (self-replicating spacecraft) harvesting stellar energy, and we would see them. I’m not arguing that misalignment is unimportant; rather, the ‘Great Silence’ suggests that civilizations rarely sustain a stable trajectory through the transition to superintelligence.
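The Von Neumann probe argument rests on a simple timescale comparison. The short Python sketch below runs it with round, assumed inputs that do not come from this article: a Milky Way disk roughly 100,000 light-years across, a galaxy at least 10 billion years old, and an expansion front averaging 1% of light speed once pauses to replicate are included. Under these assumptions the crossing takes about 0.1% of the galaxy’s age; even a front ten times slower would need only about 1%.

```python
# Back-of-envelope crossing time for a self-replicating probe wave.
# All inputs are illustrative assumptions, not measurements.

GALAXY_DIAMETER_LY = 100_000        # assumed Milky Way disk diameter, light-years
GALAXY_AGE_YEARS = 10_000_000_000   # assumed conservative round figure for the galaxy's age
FRONT_SPEED_C = 0.01                # assumed average front speed as a fraction of light speed,
                                    # slow enough to include stops for replication


def crossing_time_years(diameter_ly: float, speed_fraction_of_c: float) -> float:
    """Years for an expansion front to cross the galaxy at a constant average speed."""
    # Light travels one light-year per year, so time = distance / (fraction of c).
    return diameter_ly / speed_fraction_of_c


t = crossing_time_years(GALAXY_DIAMETER_LY, FRONT_SPEED_C)
share = t / GALAXY_AGE_YEARS
print(f"crossing time: {t:,.0f} years ({share:.1%} of the galaxy's age)")
```

The exact speed matters less than the ratio: unless the front is extremely slow, the galaxy has been old enough to be crossed many times over.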

The Real Filter: Coordination

This suggests that the primary near-term existential hurdle isn’t technical capability but social coordination. We are unlocking technologies with transformative potential (AGI, synthetic biology) while relying on governance structures designed for a 19th-century world.

There are three primary reasons why this social coordination problem may be more difficult to solve than the technical alignment problem:

1. The Multi-Polar Trap (The Race)

Even if every actor prefers safety, the perceived “winner-take-all” nature of AGI creates a race to the bottom: each actor fears that slowing down only hands the lead to a rival. This competitive pressure rewards speed over verification, making a “fast but risky” deployment more likely than a “slow but secure” one.

2. The Vulnerable World Hypothesis

As Nick Bostrom argues, technological progress may yield capabilities that sharply lower the barrier to large-scale destruction. We face a democratization of catastrophe, where the tools to trigger a systemic collapse become accessible to small groups before the governance protocols to manage them are even drafted.

3. The Complexity Mismatch

Technical engineering problems are “complicated”: they yield to analysis, compute, and step-by-step problem-solving. Coordination problems are “complex”: they are rooted in human psychology and legacy incentive structures that prioritize short-term national advantage over the long-term survival of our species.
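The multi-polar trap described above has the shape of a one-shot prisoner’s dilemma. The Python sketch below models two rival labs under that assumption; the payoff numbers are hypothetical and only encode the ordering “win the race > mutual safety > mutual racing > lose the race.” It checks that both labs prefer mutual safety to mutual racing, yet racing is each lab’s best response whatever the rival does.

```python
from itertools import product

# Hypothetical payoffs for one lab (higher is better). The numbers are
# illustrative assumptions, not estimates; only their ordering matters.
# Key: (my_move, rival_move) -> my payoff.
PAYOFF = {
    ("race", "safe"): 4,  # I race, rival holds back: I win outright
    ("safe", "safe"): 3,  # both verify carefully: shared, secure outcome
    ("race", "race"): 1,  # both cut corners: risky outcome for everyone
    ("safe", "race"): 0,  # I hold back, rival races: I lose the race
}
MOVES = ("safe", "race")


def best_response(rival_move: str) -> str:
    """Return the move that maximizes my payoff against a given rival move."""
    return max(MOVES, key=lambda my_move: PAYOFF[(my_move, rival_move)])


def pure_nash_equilibria() -> list[tuple[str, str]]:
    """Profiles where each lab best-responds to the other (the game is symmetric)."""
    return [
        (a, b)
        for a, b in product(MOVES, repeat=2)
        if best_response(b) == a and best_response(a) == b
    ]


both_prefer_safety = PAYOFF[("safe", "safe")] > PAYOFF[("race", "race")]
print("mutual safety beats mutual racing:", both_prefer_safety)  # True
print("best responses:", {r: best_response(r) for r in MOVES})   # race in both cases
print("equilibria:", pure_nash_equilibria())                      # [('race', 'race')]
```

Under these assumed payoffs the only stable outcome is mutual racing, even though both labs would score higher under mutual safety. Different payoff assumptions can produce a different game, so this is an illustration of the trap’s logic rather than a model of any real actor.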

Governance as the Critical Path

If the Great Filter is a failure of coordination, then technical alignment is a necessary but insufficient condition for survival. We are tuning the vehicle’s engine while its brakes, our regulatory systems, were designed for a slower, industrial-era world.

To pass this “cosmic exam”, we need better coordination protocols. Races persist in part because actors systematically misperceive what others would accept, and transparency reduces that uncertainty. This is the bottleneck YouCongress.org addresses: By making public preferences on policy questions transparent and backed by verifiable sources, we help journalists and policymakers see what experts and citizens actually want. That increases the chance that we solve the coordination traps (sometimes personified as “Moloch”) that currently paralyze us.
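The claim that transparency helps depends on the game’s structure. If the race is modeled as an assurance game (a “stag hunt”) rather than a strict prisoner’s dilemma, mutual restraint becomes the better choice once each actor believes the other is likely enough to reciprocate. The Python sketch below uses hypothetical payoffs and belief values; every name and number is an assumption for illustration, not data from YouCongress.org. It shows an actor who underestimates a rival’s willingness to hold back choosing to race, while the same actor with accurate information chooses restraint.

```python
# Hypothetical assurance-game ("stag hunt") payoffs for one actor, higher is better.
# Illustrative assumptions: mutual restraint is the best outcome,
# but holding back alone is the worst.
SAFE_IF_RIVAL_SAFE = 4
SAFE_IF_RIVAL_RACES = 0
RACE_IF_RIVAL_SAFE = 3
RACE_IF_RIVAL_RACES = 2


def choose(p_rival_safe: float) -> str:
    """Pick the move with the higher expected payoff, given my belief
    (a probability) that the rival will exercise restraint."""
    safe = p_rival_safe * SAFE_IF_RIVAL_SAFE + (1 - p_rival_safe) * SAFE_IF_RIVAL_RACES
    race = p_rival_safe * RACE_IF_RIVAL_SAFE + (1 - p_rival_safe) * RACE_IF_RIVAL_RACES
    return "safe" if safe > race else "race"


def restraint_threshold() -> float:
    """Belief above which restraint has the higher expected payoff."""
    downside = RACE_IF_RIVAL_RACES - SAFE_IF_RIVAL_RACES  # cost of holding back alone
    upside = SAFE_IF_RIVAL_SAFE - RACE_IF_RIVAL_SAFE      # gain of mutual restraint over racing a restrained rival
    return downside / (downside + upside)


actual_p = 0.8     # hypothetical: how willing the rival actually is to hold back
perceived_p = 0.5  # hypothetical: my belief without transparent information

print(f"threshold: {restraint_threshold():.3f}")  # 0.667
print("opaque:", choose(perceived_p))              # race
print("transparent:", choose(actual_p))            # safe
```

In this toy model transparency helps only because the rival’s actual willingness (0.8) sits above the threshold (about 0.67). If the true figure were below it, accurate information would confirm the race rather than end it.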

PS. You don’t need to believe in “superintelligence” to fear a coordination failure. Whether the challenge is AGI, climate change, poverty or housing affordability, the bottleneck is the same: our ability to make good decisions and carry them out at scale. If we improve how we coordinate, we don’t just survive the race; we finally gain the capacity to solve the problems we already have.